AI Insights: Edge AI – The Next Big Thing in Machine Learning

Introduction

AI has mostly lived in the cloud: massive models trained and deployed on centralized servers. But as the devices around us get smarter, a shift is underway toward Edge AI. Instead of sending data back and forth to the cloud, AI models increasingly run directly on edge devices such as smartphones, cameras, sensors, and IoT appliances.

This can mean faster decisions, stronger privacy, and lower bandwidth costs. From self-driving cars to smart assistants, Edge AI is quickly becoming the backbone of applications that must react in real time.

What is Edge AI?

Edge AI = AI + Edge Computing

- Edge Computing: Processing data locally on devices instead of relying on cloud servers.

- AI Models: Lightweight versions of deep learning models designed to run efficiently on limited hardware.

Together, they allow real-time AI predictions on-device without heavy reliance on internet connectivity.
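To make "predictions on-device" concrete, here is a minimal sketch in plain Python with no ML framework. It assumes a tiny logistic-regression classifier whose parameters are stored locally, so inference is just a local function call with no network request. The weights, bias, and the `predict_on_device` name are hypothetical placeholders, not taken from any real model or library; a real deployment would load a trained, compressed model through a runtime such as TensorFlow Lite or ONNX Runtime.

```python
import math

# Hypothetical parameters of a tiny logistic-regression classifier.
# In a real deployment these would come from a model trained offline
# and shipped with the app or firmware; these values are made up.
WEIGHTS = [0.8, -1.2, 2.5]
BIAS = -0.5


def predict_on_device(features: list[float]) -> float:
    """Return a probability computed entirely on the local device.

    Nothing here touches the network, so the call works offline and
    the raw features never leave the device.
    """
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
    z = BIAS + sum(w * x for w, x in zip(WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid squashes z into (0, 1)


if __name__ == "__main__":
    reading = [0.3, 0.1, 0.9]  # hypothetical sensor reading
    print(f"probability: {predict_on_device(reading):.3f}")
```

Real on-device models are far larger, but the shape is the same: the parameters ship with the device, and only the arithmetic inside the prediction function grows.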

Why Edge AI Is Game-Changing

- Low Latency – Decisions are made on the device in milliseconds, with no network round trip (think autonomous cars avoiding collisions).

- Cost Efficiency – Less cloud bandwidth and storage needed.

- Privacy & Security – Sensitive data (such as medical records or face images) doesn’t need to leave the device.

- Offline Functionality – Works even with poor or no connectivity.

- Energy Efficiency – Running an optimized model locally can consume less power than continuously transmitting raw data.
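The latency and bandwidth bullets are easier to judge with rough numbers. The plain-Python sketch below computes how far a car travels while waiting for a decision, and how much data a continuously streaming camera would upload compared with a device that only sends small event records. Every input (100 km/h, a 100 ms cloud round trip versus 10 ms on-device, a 4 Mbit/s video stream, 500 events of about 1 KB per day) is an illustrative assumption, not a measurement.

```python
# Back-of-the-envelope numbers for the latency and bandwidth bullets.
# Every input below is an illustrative assumption, not a measurement.


def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres a vehicle travels while waiting delay_ms for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000


def daily_upload_gb(bitrate_mbps: float, hours: float = 24) -> float:
    """Gigabytes uploaded when streaming continuously at bitrate_mbps."""
    bits = bitrate_mbps * 1e6 * hours * 3600
    return bits / 8 / 1e9


def daily_event_upload_gb(events_per_day: int, bytes_per_event: int) -> float:
    """Gigabytes uploaded per day when only small event records leave the device."""
    return events_per_day * bytes_per_event / 1e9


if __name__ == "__main__":
    speed = 100  # km/h, assumed highway speed
    for label, delay in [("cloud round trip (assumed)", 100), ("on-device (assumed)", 10)]:
        print(f"{label:28s} {delay:4d} ms -> {distance_during_delay(speed, delay):.2f} m travelled")

    stream = daily_upload_gb(4.0)               # assumed 4 Mbit/s camera stream
    events = daily_event_upload_gb(500, 1_000)  # assumed 500 events of ~1 KB each
    print(f"raw video upload: {stream:.1f} GB/day; event-only upload: {events:.4f} GB/day")
```

Under these assumptions the car covers about 2.78 m during the cloud round trip versus about 0.28 m with local inference, and the camera would upload roughly 43.2 GB of raw video per day against about half a megabyte of event data.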

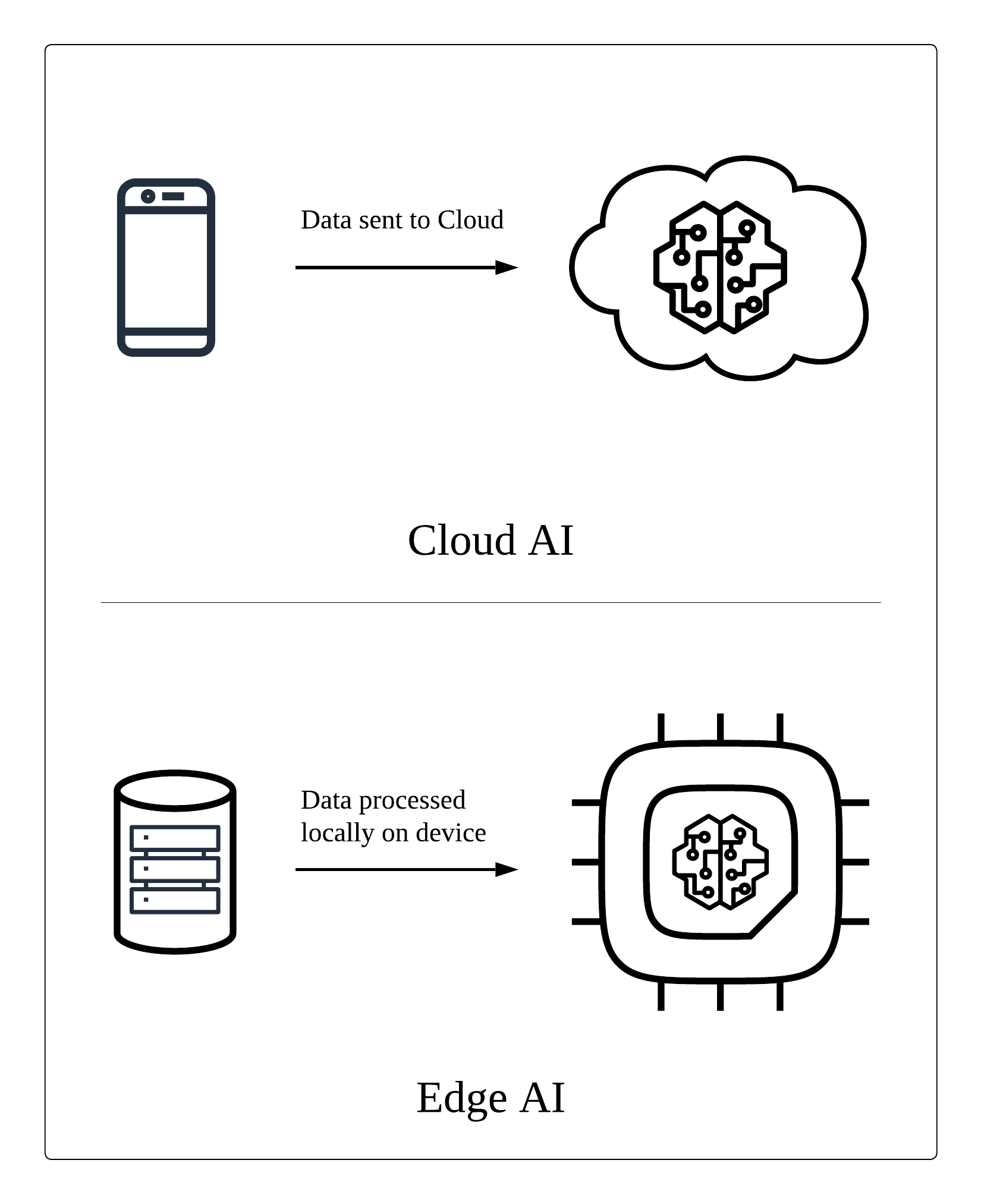

Cloud AI vs Edge AI: Key Differences

Figure: Cloud AI vs Edge AI

Real-World Use Cases

- Healthcare: Wearables analyzing patient vitals in real time.

- Retail: Smart checkout systems powered by on-device vision AI.

- Automotive: Self-driving systems making split-second decisions.

- Agriculture: Drones assessing crop health on remote farms.

- Smartphones & IoT: Voice assistants and home automation without cloud dependence.

Tools & Frameworks Powering Edge AI

- TensorFlow Lite (now renamed LiteRT) → Deploy ML models on mobile and IoT devices.

- PyTorch Mobile → Lightweight PyTorch for Android/iOS (PyTorch now directs new projects to its successor, ExecuTorch).

- ONNX Runtime → Cross-platform, optimized inference for models in the ONNX format.

- AWS Panorama / AWS IoT Greengrass → AWS services for running ML at the edge.

- NVIDIA Jetson → Embedded GPU modules built for running AI at the edge.

Challenges Ahead

- Model Compression: Shrinking large neural networks (for example, through quantization or pruning) without losing significant accuracy.

- Hardware Constraints: Limited memory and power on edge devices.

- Security Risks: Local models can be reverse-engineered if not protected.

- Standardization: Diverse devices need common frameworks and protocols.
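Two of these challenges, model compression and limited memory, meet in quantization: storing weights as 8-bit integers instead of 32-bit floats cuts weight storage by 4x at the cost of a small rounding error. The sketch below shows a simplified symmetric, per-tensor int8 quantization in plain Python. It is one compression technique among several (pruning and knowledge distillation are others), the function names are hypothetical, and the 5-million-parameter model is an assumed example. Production toolchains such as TensorFlow Lite provide their own quantization tooling, whose details differ from this version.

```python
# A simplified sketch of symmetric, per-tensor int8 post-training quantization.
# Real toolchains add calibration data, per-channel scales, and more.


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map float weights to integers in [-127, 127] using a single scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero tensors
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


def model_size_mb(num_params: int, bytes_per_param: int) -> float:
    """Storage needed for the weights alone, in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


if __name__ == "__main__":
    weights = [0.42, -1.37, 0.05, 0.91, -0.66]  # hypothetical layer weights
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    max_error = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"int8 values: {q}, scale: {scale:.5f}, max error: {max_error:.5f}")

    params = 5_000_000  # hypothetical model size
    print(f"float32: {model_size_mb(params, 4):.1f} MB, int8: {model_size_mb(params, 1):.1f} MB")
```

Going from 4 bytes to 1 byte per parameter is where the 4x saving comes from: the hypothetical 5-million-parameter model shrinks from 20 MB to 5 MB of weights, and each weight moves by at most half a quantization step (`scale / 2`).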

The Future of Edge AI

Edge AI isn’t just an add-on to cloud AI; it’s a parallel movement. As 5G, tinyML (machine learning on microcontrollers), and specialized AI chips mature, more intelligence is likely to move closer to where data is generated.

Tomorrow’s devices won’t just collect data; they’ll think, decide, and act in real time.

Pro Tip for Developers

If you already work with AI/ML, start experimenting with TensorFlow Lite or PyTorch Mobile: convert a small trained model and run it on a phone or development board. Hands-on experience with on-device inference can put you ahead in one of the fastest-growing areas of AI.
